Hijack

Told in real time, the series is a high-stakes thriller that follows the journey of a hijacked plane as it makes its way to London over a seven-hour flight while authorities on the ground scramble for answers.

Hijack was shot over four soundstages in the UK featuring a combination of LED configurations and often shooting on multiple stages simultaneously. Operating all four volumes, Lux Machina fused the virtual and physical realms by incorporating advanced technology and innovative solutions, including our system playback technology that transformed LED screens into dynamic, lifelike landscapes.

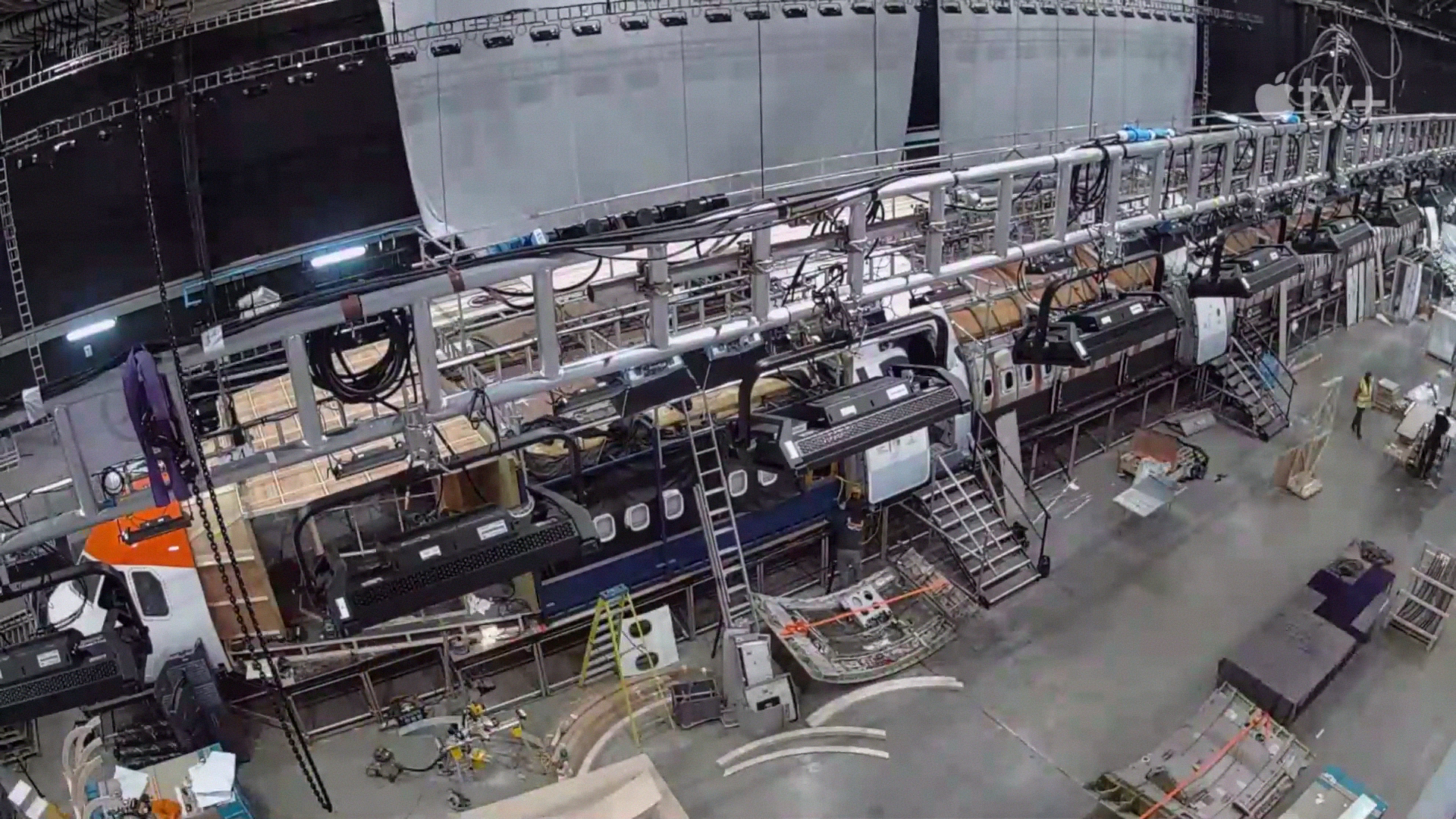

Each stage contained a different set piece used throughout production, including the airplane cockpit (set on a hydraulic motion platform), the plane’s fuselage, the air traffic control room, and the glass-enclosed conference room where the government officials were managing the hijack situation. A fourth swing stage was used for internal airport scenes.

TAKING FLIGHT WITH VAD

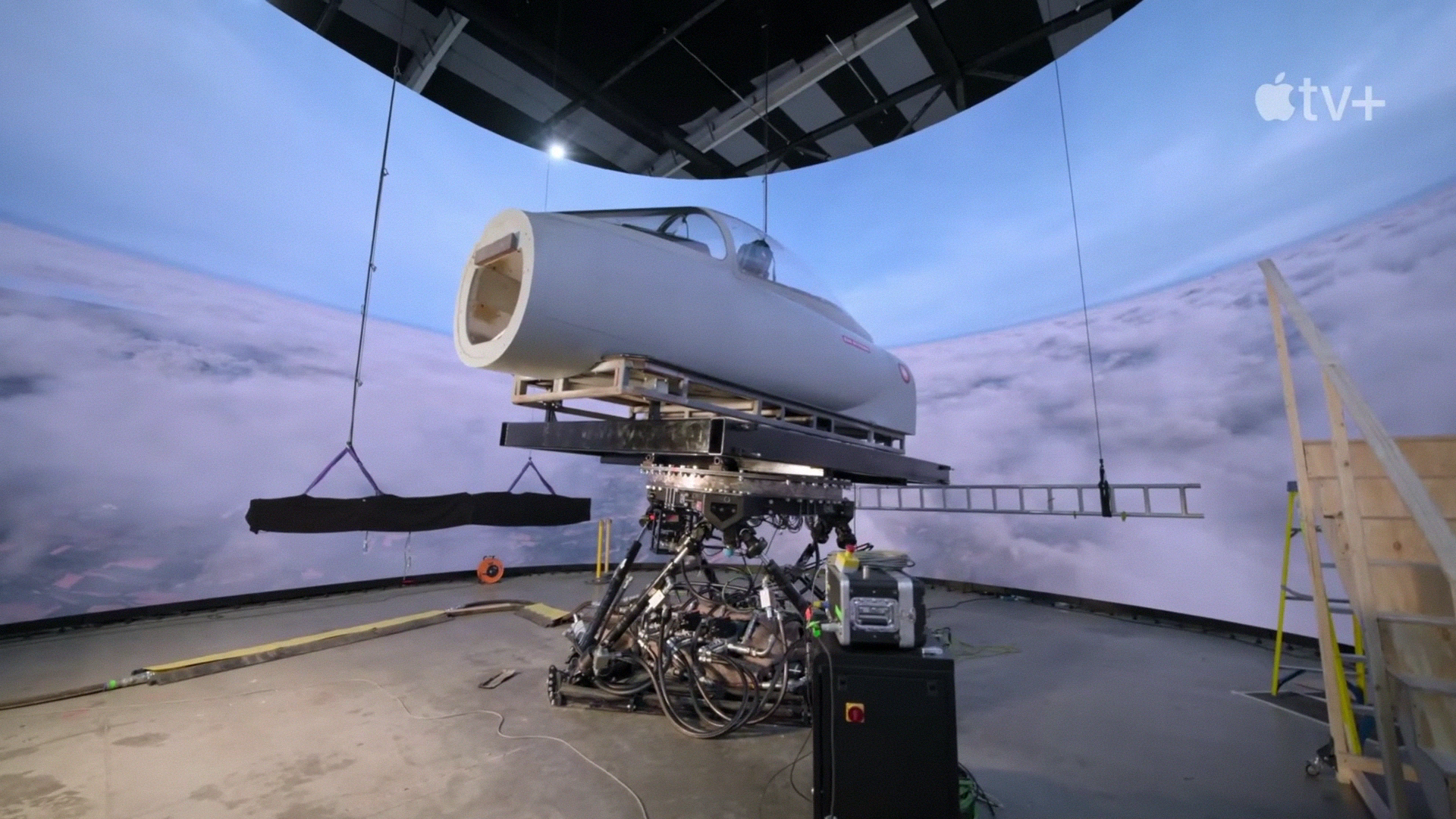

Two soundstages were wholly dedicated to the aircraft itself. One housed the cockpit, which sat on a fully mechanical gimbal facing an LED wall and reacted in real time to what was going on. The second housed the fuselage, the main body of the airplane, where the actors and extras playing the passengers and crew members sat for the duration of filming.

According to cinematographer Ed Moore, the set piece was an actual full-scale Airbus A330, complete with 230 or so seats. LED screens were installed on tracks on either side of the plane, providing moving sky content and giving the actors the illusion that they were in flight and could react accordingly.

To create the illusion of flight, Lux’s Virtual Art Department (VAD) created assets containing an array of digital clouds, each formation hovering above its own meticulously crafted terrain, be it land, water, or desert. Lux’s technology provided unprecedented control over these dynamic cloudscapes, enabling the director and cinematographer to manipulate them in real time, adjusting speed, rotation, and direction as required by the script. This approach mirrored the movement of an airplane through the skies and significantly amplified the visual narrative.
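As a rough illustration of what adjusting speed, rotation, and direction can mean for a cloud layer, the sketch below advances a layer's position and angle for one time step. It is a minimal sketch only: the `CloudLayerControls` class, the `advance` function, and the units are hypothetical and are not taken from Lux's tooling.

```python
import math
from dataclasses import dataclass


@dataclass
class CloudLayerControls:
    """Hypothetical operator controls for a single cloud layer."""
    speed: float               # scroll speed, arbitrary units per second
    direction_deg: float       # travel direction: 0 = +x, 90 = +y
    rotation_deg_per_s: float  # how fast the layer rotates


def advance(offset_x, offset_y, angle_deg, controls, dt):
    """Return the layer's new (x, y, angle) after dt seconds.

    Assumption: the layer scrolls in a straight line along the chosen
    direction and rotates at a constant rate.
    """
    heading = math.radians(controls.direction_deg)
    new_x = offset_x + controls.speed * math.cos(heading) * dt
    new_y = offset_y + controls.speed * math.sin(heading) * dt
    new_angle = (angle_deg + controls.rotation_deg_per_s * dt) % 360.0
    return new_x, new_y, new_angle
```

Calling `advance` once per frame with the current control values would let a change to any of the three settings take effect on the very next frame.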

“We created an extensive cloud library that could be featured on the LED screens on both sides of the passenger plane’s windows – as well as the cockpit,” said Lux Producer Kyle Olson. “The ability to control these elements in real time added a groundbreaking layer of realism to Hijack, allowing every scene to come alive with stunning accuracy and detail.”

During pre-production, director of photography Ed Moore had a four-screen flight simulator in his office, complete with cockpit controls. He played it for seven hours, tracking the same route the show’s plane would fly, to get a sense of the lighting. “It gave him a lot of ideas for the type of imagery that he was interested in, and that imagery was provided to us as a mood board, so to speak,” said Lux Chief Technology Officer Kris Murray. “Our team could recreate portions of that, or take inspiration from them, to create customized versions of images that Ed could control.”

“On the flight route from Dubai to London, we knew there would be various landscapes, and we knew we did not want the same cloud view the whole time,” explained Moore. “Seventy-five percent of the show takes place inside the plane. Almost everyone has been on one, so if something doesn’t feel real to the viewer, they’ll immediately be taken out of the story. There are visual cues we all recognize when we’re on a flight, like the sun's beams coming in and hitting your TV screen, for example. Little things like that make the plane’s world feel real. It was important for me to immerse the audience in it as much as possible, so when the hijack occurs, they feel like they are in this pressure cooker with the passengers.”

THROUGH THE LOOKING GLASS

For a series where much of the on-the-ground action takes place in a government building’s conference room, four walls and a table can get boring quickly. Production wanted to make the set piece visually stimulating for the audience. The result was a room that was “nothing but windows and reflective surface everywhere,” said Olson. The only way to do that was to find that setting in real life or to use virtual production.

“This set piece could easily have been the most expensive location in the entire production, because prime real estate in London with views of Big Ben and the River Thames would cost you an arm and a leg to rent out,” he said.

Also, shooting on location would mean that production would depend on having perfect lighting and weather conditions, “elements you could never control for a shoot of this length and size,” said David Gray, managing director of Lux Machina UK.

Virtual production became a cost-effective way to get the same result, and a better one. The show found a location overlooking the London skyline and shot a master plate from 5 am to 9 pm, which provided a range of lighting options from sun-up to sundown, depending on the time of day the script called for. With that content on the LED wall, no post-production work was needed.
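To illustrate how one continuous plate can serve any scripted time of day, the sketch below converts a clock time into a playback offset into the plate. It is only a sketch under stated assumptions: the `plate_offset_seconds` helper is hypothetical, and it assumes the plate runs at real-time speed from exactly 05:00 to 21:00, which the production's actual playback system may not do.

```python
from datetime import time

# Assumed plate window, based on the 5 am to 9 pm shoot described above.
PLATE_START = time(5, 0)
PLATE_END = time(21, 0)


def _seconds_since_midnight(t: time) -> int:
    return t.hour * 3600 + t.minute * 60 + t.second


def plate_offset_seconds(scripted: time) -> int:
    """Return seconds into the master plate for a scripted time of day.

    Hypothetical helper: assumes one second of plate footage corresponds
    to one second of real daylight, with no gaps in the recording.
    """
    if not (PLATE_START <= scripted <= PLATE_END):
        raise ValueError(f"{scripted} falls outside the plate window")
    return _seconds_since_midnight(scripted) - _seconds_since_midnight(PLATE_START)
```

For example, a scene scripted for 7:30 am would start 9,000 seconds (two and a half hours) into the plate, while a time outside the window raises an error rather than silently picking the wrong light.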

“It would have been an absolute nightmare with a green screen, figuring out how to composite some of the shots,” said Moore. “Instead, we have so many more lighting opportunities because we’re framing the shot with the world on the screen in mind. Instead of repositioning the actors to suit the background, we’re adjusting the background to suit the actors, getting a whole other level of creative freedom.”

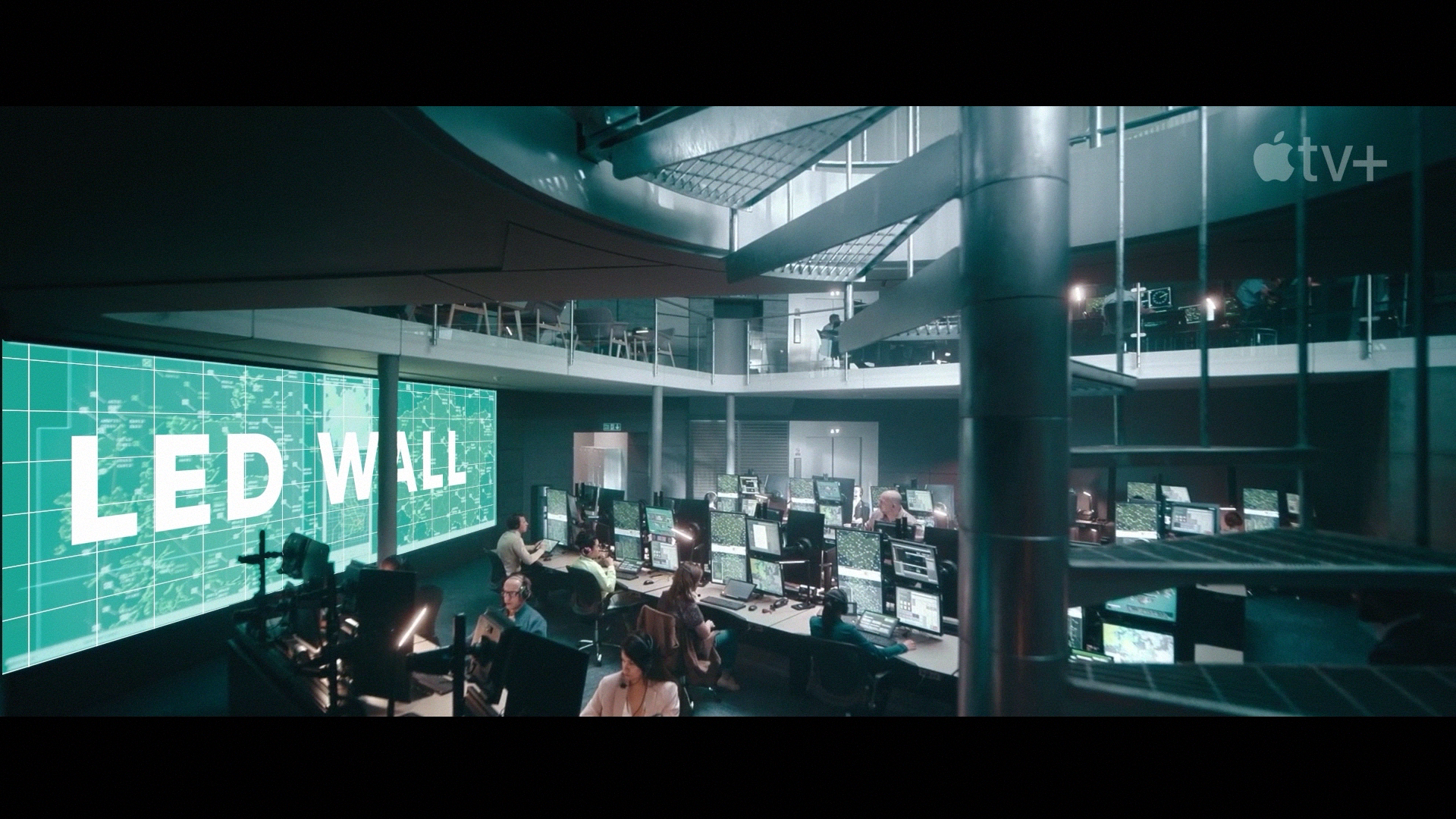

TAKING CONTROL

For the air traffic control room, the LED screens served as ATC screens tracking aircraft movements and positions during flight. This was a rare occasion where LED screens served as actual screens in the story. Without high-resolution technology, the images displayed on the screens would have been added in post-production, with the actors staring at a green screen during the shoot. The real-time display enabled them to act and react to what they saw on the LED.

TAILORING TECHNOLOGY

One of the toughest challenges was building a workflow that fit the production timeline while giving creatives enough flexibility to achieve the series' desired look and feel without sacrificing quality. That meant creating a bespoke solution tailored specifically to Hijack’s needs.

“To do that, we had to create some custom plug-ins, custom software, and make some modifications to Unreal Engine,” explained Murray. “We took the 3D workflow we used on large-scale productions like House of the Dragon and The Mandalorian and mashed them with the type of work we’d previously done using 2D plates. That meant building a pipeline that allowed us to export content, in the same format as Unreal's nDisplay, that could be played on a pre-rendered playback system.”

The system playback technology was at the core of Hijack's immersive experience, allowing LED screens to display meticulously curated footage of majestic cloudscapes, pinpoint-accurate air traffic maps, and hyper-realistic airport information monitors.

“This offered a profound benefit to the actors: the ability to interact with their surroundings in real time,” said Olson. “Whether portraying the collective anxiety of hundreds of passengers aboard an aircraft or the intense focus of air traffic control personnel, actors could reference the screens just as they would in the real world. This interactive facet added an undeniable layer of authenticity to the performances, intensifying the narrative's tension and deepening its emotional resonance.”